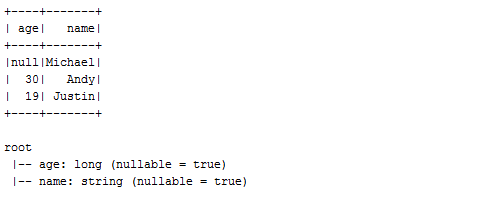

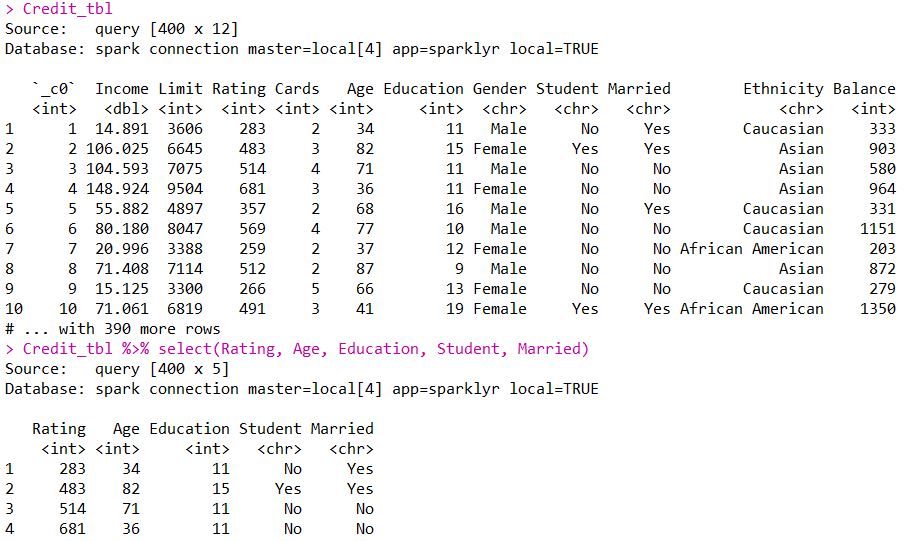

Nowadays, working with “big data” almost always means working with the Hadoop ecosystem. A few years ago this also meant that you had to be a good Java programmer to work in such an environment: even a simple word count program took several dozen lines of code. But 2-3 years ago things changed, thanks to Apache Spark with its concise (but powerful!) functional-style API. It is written in Scala, but also has Java, Python and, recently, R APIs. I started to use Spark more than 2 years ago (and have used it a lot). [...] the only fully featured - RDD level API, MLlib, GraphX, etc.

Disclaimer: originally I planned to write a post about R functions/packages that can read data from HDFS (with benchmarks), but in the end it became more of an overview of SparkR capabilities.
